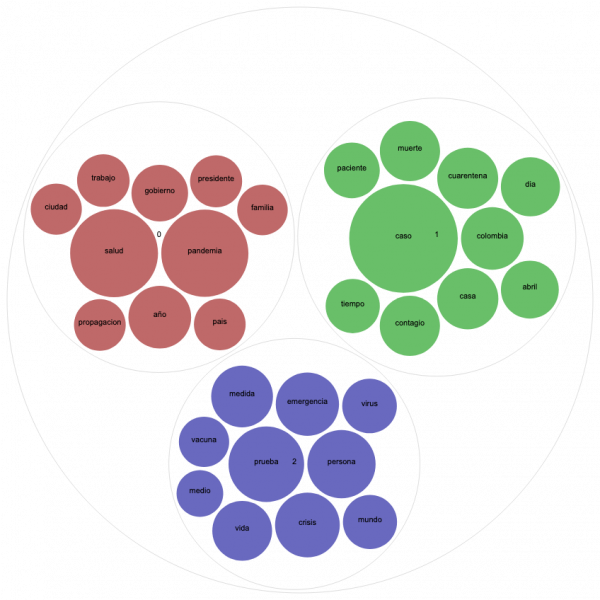

This project, carried out by a community of scholars and students from the University of Miami (USA) and CONICET (Argentina), explores the digital narratives behind COVID-19 data from a humanistic, bilingual perspective. Our goal is to analyze data drawn from digital resources and Twitter using the qualitative and quantitative methods of the Digital Humanities.

Access our Twitter Corpus

We have built a corpus of all the tweets that can be searched by keyword, date, and country!

More information

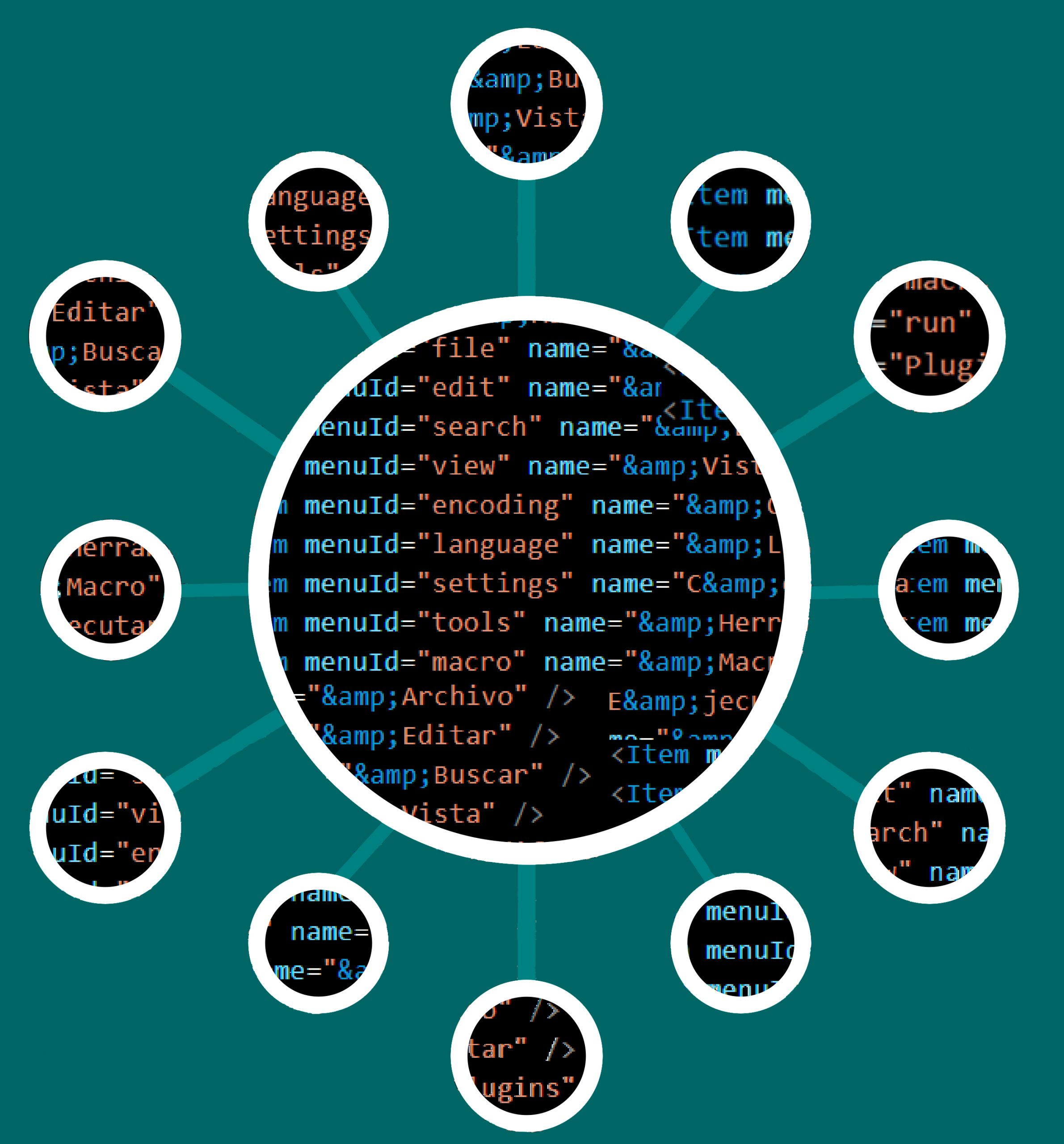

Access our GitHub repository

Our GitHub dataset contains the Tweet IDs collected from April 25 to the present day. Learn how to download and hydrate them!

More information

Access our API

Use our API (Application Programming Interface) to download a collection of tweets by date (from April 25 to today) and by country.

More information

Read our blog:

- How to run the coveet.py script

The coveet.py script is hosted in the GitHub repository of the Digital Narratives of Covid-19 project. On the repository's main page, https://github.com/dh-miami/narratives_covid19, there are two different buttons that let you run a Binder environment: one of them launches the… Read more: How to run the coveet.py script

- Reflections on quantified data: #ScholarStrike in the context of COVID-19

Although the COVID-19 pandemic created a truly shared global context for the first time in years, it soon began to coexist with the local reality of each country. Twitter, as expected, was no stranger to this, and certain hashtags soon… Read more: Reflections on quantified data: #ScholarStrike in the context of COVID-19

- Pensar los datos cuantificados: #ScholarStrike en el contexto de la COVID-19

Although the COVID-19 pandemic imposed a shared global context for the first time in years, that context soon began to coexist with the local circumstances of each country. Twitter, as might be expected, was no exception, and soon… Read more: Pensar los datos cuantificados: #ScholarStrike en el contexto de la COVID-19