At the end of April, we started to familiarize ourselves with the Twitter API and to ask how to capture the public conversations happening on this social network.

We quickly realized that we needed a plan and a method for organizing our corpus, meeting our objectives, and dividing the different tasks among our team members.

There are already many English-language datasets (see the post “Mining Twitter and Covid-19 datasets” from April 23rd, 2020). To start with a more clearly defined corpus, we decided to focus on Spanish-language datasets, both in general and by region. We also wanted to give special attention to South Florida and approach it from a bilingual perspective, because of its linguistic diversity, especially in English and Spanish. With this in mind, part of the team analyzes public conversations in English and Spanish with a focus on South Florida and Miami, while the CONICET team is in charge of exploring Spanish-language data, in particular from Argentina.

To enlarge our dataset, we also decided to harvest all tweets in Spanish and to create specific datasets for other parts of Latin America (Mexico, Colombia, Perú, Ecuador) and for Spain. To keep the corpus organized, we built a relational database that stores all the information connected to these tweets and automatically ingests hundreds of thousands of tweets a day.
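The post does not describe the database schema, so the following is only a minimal sketch, assuming a single hypothetical `tweets` table built with Python's standard `sqlite3` module. The table and column names are illustrative, not the project's actual design. The point it shows is how daily ingestion can stay idempotent: a composite primary key on (tweet ID, dataset) lets the same tweet belong to both a regional file and the general Spanish file, while `INSERT OR IGNORE` skips rows that were already stored.

```python
import sqlite3

# Hypothetical schema for illustration only; not the project's real database.
SCHEMA = """
CREATE TABLE IF NOT EXISTS tweets (
    tweet_id   INTEGER NOT NULL,   -- numeric tweet ID
    dataset    TEXT    NOT NULL,   -- e.g. "es_ar", "en_fl", "es"
    created_at TEXT    NOT NULL,   -- ISO 8601 timestamp
    lang       TEXT    NOT NULL,   -- "es" or "en"
    PRIMARY KEY (tweet_id, dataset)
);
"""

def ingest(conn, rows):
    """Insert (tweet_id, created_at, lang, dataset) rows, skipping ones already stored.

    Returns the number of rows actually inserted in this batch."""
    before = conn.total_changes
    conn.executemany(
        "INSERT OR IGNORE INTO tweets (tweet_id, created_at, lang, dataset) "
        "VALUES (?, ?, ?, ?)",
        rows,
    )
    conn.commit()
    return conn.total_changes - before

def count_by_dataset(conn):
    """Number of stored tweets per dataset label."""
    return dict(conn.execute("SELECT dataset, COUNT(*) FROM tweets GROUP BY dataset"))
```

Running the same batch twice (for example after a harvester restart) inserts nothing the second time, which is the property a daily automatic ingest needs.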

We have several queries running, which correspond to the datasets in our “twitter-corpus” folder on GitHub. In short, there are three main types of queries:

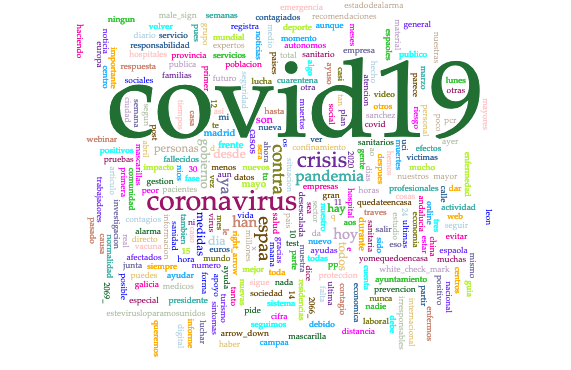

- General query for Spanish, harvesting all tweets that contain these hashtags and keywords:

  covid,coronavirus,pandemia,cuarentena,confinamiento,quedateencasa,desescalada,distanciamiento social

- Specific query for English in Miami and South Florida. The hashtags and keywords harvested are:

  covid,coronavirus,pandemic,quarantine,stayathome,outbreak,lockdown,socialdistancing

- Specific queries with the same keywords and hashtags for Spanish in Argentina, Mexico, Colombia, Perú, Ecuador, and Spain, using the tweet's geolocation when possible and/or the user information.
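The keyword lists above read like the comma-separated `track` parameter of the Twitter API's filtered stream, where commas separate alternatives and the words inside one phrase (such as “distanciamiento social”) must all appear, in any order. Assuming that semantics (the post does not say which endpoint the project uses), a minimal local re-check of harvested text could look like this. It only approximates Twitter's server-side matching: it lowercases the text and compares whole word tokens, so `#QuedateEnCasa` matches “quedateencasa” but “covid19” does not match “covid”.

```python
import re

# Keyword lists based on the queries above.
ES_TERMS = ["covid", "coronavirus", "pandemia", "cuarentena", "confinamiento",
            "quedateencasa", "desescalada", "distanciamiento social"]
EN_TERMS = ["covid", "coronavirus", "pandemic", "quarantine", "stayathome",
            "outbreak", "lockdown", "socialdistancing"]

def tokens(text):
    """Lowercased word tokens; '#QuedateEnCasa' yields 'quedateencasa'."""
    return set(re.findall(r"\w+", text.lower()))

def matches(text, terms):
    """True if, for at least one term, every word of that term occurs in the text."""
    words = tokens(text)
    return any(all(w in words for w in term.split()) for term in terms)
```

For example, `matches("Seguimos en #Cuarentena", ES_TERMS)` is true, while “El distanciamiento es clave” does not match, because the phrase also requires “social”.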

Folders are organized by day (YEAR-MONTH-DAY). Each folder contains nine plain-text files whose names start with “dhcovid”, followed by the date (YEAR-MONTH-DAY), the language (“en” for English, “es” for Spanish), and, for the regional datasets, a region abbreviation (“fl”, “ar”, “mx”, “co”, “pe”, “ec”, “es”):

- dhcovid_YEAR-MONTH-DAY_es_fl.txt: Spanish-language tweets geolocated in South Florida. The geolocation is determined by tweet coordinates, by place, or by user information.

- dhcovid_YEAR-MONTH-DAY_en_fl.txt: Only English-language tweets that refer to the area of Miami and South Florida. We made this choice because multiple projects are already harvesting English data, and our project is particularly interested in this area, both because of our home institution (University of Miami) and because we aim to study public conversations from a bilingual (EN/ES) point of view.

- dhcovid_YEAR-MONTH-DAY_es_ar.txt: Tweets geolocated (by georeferences, by place, or by user) in Argentina.

- dhcovid_YEAR-MONTH-DAY_es_mx.txt: Tweets geolocated (by georeferences, by place, or by user) in Mexico.

- dhcovid_YEAR-MONTH-DAY_es_co.txt: Tweets geolocated (by georeferences, by place, or by user) in Colombia.

- dhcovid_YEAR-MONTH-DAY_es_pe.txt: Tweets geolocated (by georeferences, by place, or by user) in Perú.

- dhcovid_YEAR-MONTH-DAY_es_ec.txt: Tweets geolocated (by georeferences, by place, or by user) in Ecuador.

- dhcovid_YEAR-MONTH-DAY_es_es.txt: Tweets geolocated (by georeferences, by place, or by user) in Spain.

- dhcovid_YEAR-MONTH-DAY_es.txt: All tweets in Spanish, regardless of their geolocation.
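Because every file name follows the same pattern, a short script can check that a daily folder is complete or tell which dataset a file belongs to. The sketch below encodes the naming convention described above with a regular expression. The helper names `parse_name` and `expected_names` are our own illustrations, not part of the published repository.

```python
import re
from datetime import date

REGIONS = ("fl", "ar", "mx", "co", "pe", "ec", "es")

# dhcovid_YEAR-MONTH-DAY_<lang>[_<region>].txt
PATTERN = re.compile(
    r"^dhcovid_(\d{4})-(\d{2})-(\d{2})_(en|es)(?:_(fl|ar|mx|co|pe|ec|es))?\.txt$"
)

def parse_name(filename):
    """Return (date, language, region or None) for a dataset file name."""
    m = PATTERN.match(filename)
    if m is None:
        raise ValueError(f"unexpected file name: {filename}")
    year, month, day, lang, region = m.groups()
    return date(int(year), int(month), int(day)), lang, region

def expected_names(day):
    """The nine file names expected in the folder for one day."""
    stamp = day.isoformat()  # YEAR-MONTH-DAY
    names = [f"dhcovid_{stamp}_en_fl.txt", f"dhcovid_{stamp}_es.txt"]
    names += [f"dhcovid_{stamp}_es_{region}.txt" for region in REGIONS]
    return names
```

For instance, `parse_name("dhcovid_2020-05-23_es.txt")` returns the date, `"es"`, and `None`, since the general Spanish file carries no region suffix. Comparing `expected_names(day)` with the actual folder listing shows any missing file.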

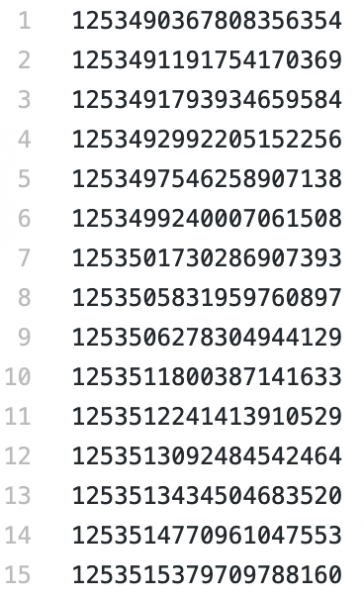

As of today, May 23rd, we have collected the following totals:

- Spanish from South Florida (es_fl): 6,440 tweets

- English from South Florida (en_fl): 22,618 tweets

- Spanish from Argentina (es_ar): 64,398 tweets

- Spanish from Mexico (es_mx): 402,804 tweets

- Spanish from Colombia (es_co): 164,613 tweets

- Spanish from Peru (es_pe): 55,008 tweets

- Spanish from Ecuador (es_ec): 49,374 tweets

- Spanish from Spain (es_es): 188,503 tweets

- Spanish (es): 2,311,482 tweets
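As a quick check on these figures, the seven regional Spanish datasets can be summed and compared with the general Spanish dataset. The numbers below are copied from the list. The ratio is only a rough indicator: the post does not say whether every regional tweet also appears in the general Spanish file.

```python
# Totals as of May 23rd, copied from the list above.
counts = {
    "es_fl": 6_440, "en_fl": 22_618, "es_ar": 64_398, "es_mx": 402_804,
    "es_co": 164_613, "es_pe": 55_008, "es_ec": 49_374, "es_es": 188_503,
    "es": 2_311_482,
}

# Keys starting with "es_" are the seven regional Spanish datasets.
regional_es = sum(n for key, n in counts.items() if key.startswith("es_"))
share = regional_es / counts["es"]

print(f"Regional Spanish datasets: {regional_es:,} tweets")  # 931,140
print(f"Relative to the general Spanish dataset: {share:.1%}")  # 40.3%
```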

We do not include retweets, only original tweets.
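The post does not say how retweets are excluded. One common approach, assuming the harvester receives tweets in the Twitter API v1.1 JSON format, relies on the fact that native retweets carry a `retweeted_status` object. Quote tweets carry `quoted_status` instead and contain new text, so this sketch keeps them as original tweets. Old-style manual retweets that only start with “RT @” are not caught by this check.

```python
def is_original(tweet: dict) -> bool:
    """True unless the v1.1 tweet JSON marks it as a native retweet."""
    return "retweeted_status" not in tweet

def keep_originals(tweets):
    """Drop native retweets; quote tweets and plain tweets are kept."""
    return [t for t in tweets if is_original(t)]
```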

The corpus consists of lists of tweet IDs. To obtain the original tweets, you can use the Twitter “Hydrator”, which takes the IDs and downloads all of their metadata into a CSV file for you.
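If you prefer to hydrate the IDs with your own script instead of a desktop tool, the first step is to read an ID file and split it into batches. The following is a minimal sketch. It assumes each file holds one ID per line, and it uses batches of 100 because the Twitter API v1.1 `statuses/lookup` endpoint accepts up to 100 IDs per request. The network call itself is left out. IDs are kept as strings because spreadsheet tools that convert them to floating-point numbers can silently corrupt them.

```python
from typing import Iterable, Iterator, List

BATCH_SIZE = 100  # maximum IDs per v1.1 statuses/lookup request

def read_ids(lines: Iterable[str]) -> List[str]:
    """Collect tweet IDs as strings, skipping blank or non-numeric lines and duplicates."""
    seen = set()
    ids = []
    for line in lines:
        tid = line.strip()
        if tid.isdigit() and tid not in seen:
            seen.add(tid)
            ids.append(tid)
    return ids

def batches(ids: List[str], size: int = BATCH_SIZE) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` IDs."""
    for start in range(0, len(ids), size):
        yield ids[start:start + size]
```

Typical usage would be `with open("dhcovid_2020-05-23_es_ar.txt") as fh: ids = read_ids(fh)`, followed by one lookup request per batch.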

We started collecting our dataset on April 24th, 2020. For earlier dates (January to April 24th), we hope to use the PanaceaLab dataset, since it is one of the few that collects data in all languages; we expect to do this within the next couple of months.
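When combining an external list of tweet IDs with our own collection, it helps to keep only the tweets created before our start date. Tweet IDs are Snowflake IDs: the bits above the lowest 22 store milliseconds since Twitter's epoch (1288834974657 ms after the Unix epoch). This means the creation time can be read without hydrating anything. The sketch below uses that property with a hypothetical cutoff of April 24th, 2020 at 00:00 UTC. The time zone is our assumption; the post does not specify one.

```python
from datetime import datetime, timedelta, timezone

TWITTER_EPOCH_MS = 1288834974657  # Snowflake epoch, in ms since the Unix epoch
UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
CUTOFF = datetime(2020, 4, 24, tzinfo=timezone.utc)  # assumed start of our own collection

def tweet_time(tweet_id: int) -> datetime:
    """Creation time (UTC, millisecond precision) encoded in a Snowflake tweet ID."""
    ms_since_unix = (tweet_id >> 22) + TWITTER_EPOCH_MS
    return UNIX_EPOCH + timedelta(milliseconds=ms_since_unix)

def ids_before(ids, cutoff: datetime = CUTOFF):
    """Keep IDs (ints or numeric strings) whose tweets were created before `cutoff`."""
    return [i for i in ids if tweet_time(int(i)) < cutoff]
```

Because the timestamp sits in the high bits, IDs also sort chronologically, so a sorted ID list can be cut at the first ID past the cutoff.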

We have released a first version of our dataset through Zenodo: Susanna Allés Torrent, Gimena del Rio Riande, Nidia Hernández, Romina De León, Jerry Bonnell, & Dieyun Song. (2020). Digital Narratives of Covid-19: a Twitter Dataset (Version 1.0) [Data set]. Zenodo. http://doi.org/10.5281/zenodo.3824950